Drive tangible business outcomes with data and analytics at every opportunity. Differentiate your products, services and customer experiences and surpass your competition with an insight-based approach.

Lock or Unlock the future?

Can the technologies presented here put us on the path to a better future? Or do they risk leading us down the wrong road? A look at the perspective of our experts.

On the right path…

The emergence of numerous technologies that smooth out the technical complexities of working with data, together with the growing number of tools accessible to non-specialists, can give a tremendous boost to the culture and use of data in companies. This can of course help companies make more informed choices, become more transparent, implement more sustainable models, and better understand and reduce their negative externalities. Data will be as important for the energy transition as it is for digital transformation.

… or the wrong path?

The increased use of data and artificial intelligence also brings new ethical and societal responsibilities. Security, privacy, inclusiveness, the explainability and fairness of algorithms, and social impact will become central issues. Without strong values and institutionalised safeguards, abuses can occur very quickly. The venal exploitation of personal data, the manipulation of public opinion, systematic discrimination and mass surveillance are not science fiction but current affairs.

Data-driven Intelligence


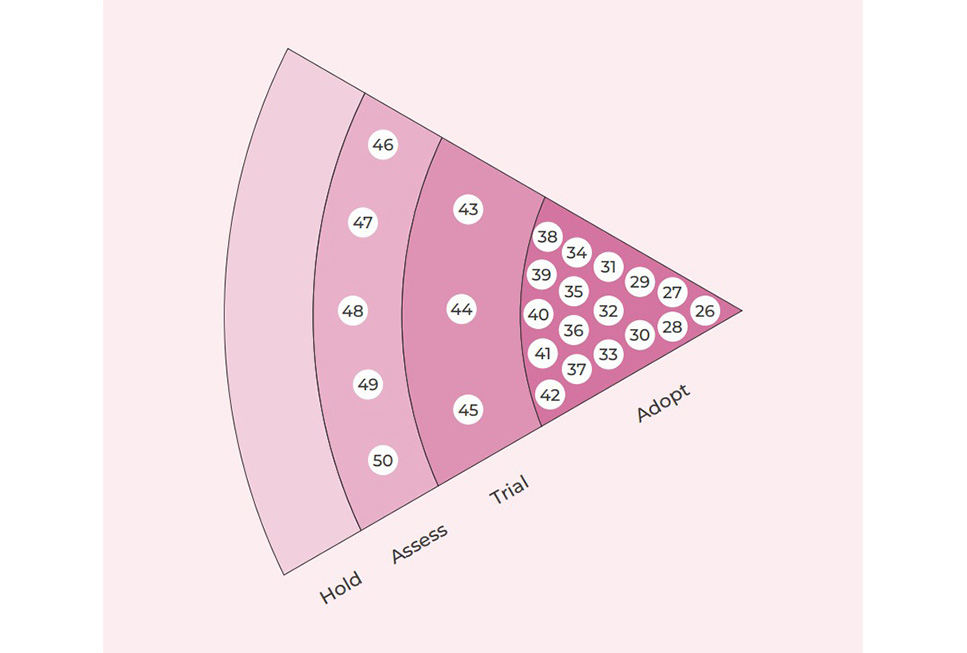

- Adopt: 26. Alteryx, 27. Apache Kafka, 28. Apache Spark, 29. AutoML, 30. Azure Synapse Analytics, 31. Databricks, 32. Data mesh, 33. Delta Lake, 34. Google Data Cloud, 35. Informatica, 36. Kubeflow, 37. Looker, 38. Microsoft Power BI, 39. Qlik, 40. Snowflake, 41. Tableau, 42. Talend

- Trial: 43. Airbyte, 44. AWS QLDB, 45. dbt

- Assess: 46. Alation, 47. Anomalo, 48. Collibra, 49. Dataiku, 50. Fivetran

- Airbyte, Trial

The expensive and complex maintenance of connectors, the linchpins of any data infrastructure, leaves companies at the mercy of the proprietary strategies of ETL (extract, transform, load) and ELT (extract, load, transform) software vendors. Airbyte’s answer is open source. The company provides a complete platform that makes it much easier to synchronise data between systems and databases, built on connectors that run in Docker containers. Airbyte certifies these connectors before making them available to users. In addition, developers have a direct interest in keeping the connectors well maintained, which helps guarantee their robustness and stimulates the community. Thanks to this model, Airbyte has been a resounding success: three funding rounds and more than a hundred connectors created in just a year and a half of existence.
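To make the connector model more concrete, here is a minimal sketch of a source connector that performs an incremental sync and writes its output as JSON lines. The message shape is loosely modelled on the Airbyte protocol (RECORD messages followed by a STATE message that records how far the sync got). The `read_source` function, the `users` stream and the `updated_at` cursor field are hypothetical, and this is not a certified Airbyte connector.

```python
import json
import sys
import time
from typing import Iterable, Iterator, Optional


def read_source(rows: Iterable[dict], stream: str, cursor_field: str,
                last_cursor: Optional[str] = None) -> Iterator[dict]:
    """Yield RECORD messages for rows newer than last_cursor, then one STATE message.

    Hypothetical helper: an incremental sync only emits rows whose cursor value
    is greater than the value saved at the end of the previous run.
    """
    max_cursor = last_cursor
    emitted_at = int(time.time() * 1000)  # milliseconds since the epoch
    for row in rows:
        value = row[cursor_field]  # ISO dates compare correctly as strings
        if last_cursor is not None and value <= last_cursor:
            continue  # already synchronised in a previous run
        yield {"type": "RECORD",
               "record": {"stream": stream, "data": row, "emitted_at": emitted_at}}
        if max_cursor is None or value > max_cursor:
            max_cursor = value
    # The STATE message lets the platform resume from this cursor next time.
    yield {"type": "STATE", "state": {"data": {stream: {cursor_field: max_cursor}}}}


if __name__ == "__main__":
    source_rows = [
        {"id": 1, "name": "Ada", "updated_at": "2022-01-01"},
        {"id": 2, "name": "Grace", "updated_at": "2022-03-15"},
    ]
    # Connectors communicate with the platform through stdout, one JSON message per line.
    for message in read_source(source_rows, "users", "updated_at", last_cursor="2022-02-01"):
        sys.stdout.write(json.dumps(message) + "\n")
```

Because each connector only has to speak this simple line-based format, it can be packaged in its own container and maintained independently of the platform.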

- Alation, Assess

With its flagship product, Data Catalog, Alation aims to help companies develop the culture and use of data within their organisation. To achieve this, Data Catalog offers functionality for data discovery, governance, understanding and sharing. In particular, Data Catalog combines artificial intelligence and human expertise to make data explicit, assess its quality and understand its origin and meaning, enabling all business and IT stakeholders to access it more easily and use it with greater confidence. Alation has been named one of the leaders in data governance by Forrester and has a number of partnerships, including with Snowflake, Tableau and AWS.

- Alteryx, Adopt

As the use of data becomes more widespread in the enterprise, working manually in Excel spreadsheets or asking IT for support whenever a report needs to be created or modified are becoming less and less viable options. With Alteryx, business users can prepare their data autonomously, build their processes and automate them through a graphical interface that requires no code. Extremely powerful and easy to use, Alteryx lets them save considerable time and work with data independently. Founded in 1997 under the name SRC, the company became Alteryx in 2010 and has since accelerated its development, notably by forging partnerships with UiPath, Snowflake, Tableau and AWS.


- Anomalo, Assess

Ensuring data quality is crucial, but it is a tedious task that becomes increasingly unsustainable as volumes grow. This is why Anomalo aims to automate it. Thanks to the indicators (freshness, completeness, homogeneity) and reports the solution provides, anomalies can be identified and corrected before they disrupt the whole analytics chain. The importance of the challenge and the originality of Anomalo’s approach, which has no competitor in this niche, have enabled it to attract substantial investment. However, it still has to prove itself, and the current absence of documentation and of a trial version makes us cautious. Nevertheless, it remains a supplier to watch, with which to establish relationships, and whose solution should be evaluated as early as possible. If it fulfils its promises, it will be a tool that every company needs.
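The kind of indicators mentioned above can be illustrated with a small sketch that computes freshness (the age of the most recent row) and completeness (the share of populated values per column) over an in-memory table, and raises an alert when a threshold is crossed. This is our own illustration of the general idea, not Anomalo’s API or detection method; the column names, thresholds and the `check_table` function are hypothetical.

```python
from datetime import datetime, timedelta
from typing import List


def freshness(rows: List[dict], ts_column: str, now: datetime) -> timedelta:
    """Age of the most recent row; a large value suggests the feed has stalled."""
    latest = max(row[ts_column] for row in rows)
    return now - latest


def completeness(rows: List[dict], column: str) -> float:
    """Fraction of rows in which the column is populated (not None)."""
    filled = sum(1 for row in rows if row.get(column) is not None)
    return filled / len(rows)


def check_table(rows: List[dict], ts_column: str, columns: List[str], now: datetime,
                max_age: timedelta, min_completeness: float) -> List[str]:
    """Return one alert per indicator that falls outside its (hypothetical) threshold."""
    alerts = []
    age = freshness(rows, ts_column, now)
    if age > max_age:
        alerts.append(f"stale data: latest row is {age} old")
    for column in columns:
        ratio = completeness(rows, column)
        if ratio < min_completeness:
            alerts.append(f"{column}: only {ratio:.0%} of values present")
    return alerts


rows = [
    {"loaded_at": datetime(2022, 5, 1, 8), "amount": 10.0, "country": "FR"},
    {"loaded_at": datetime(2022, 5, 1, 9), "amount": None, "country": "BE"},
    {"loaded_at": datetime(2022, 5, 1, 10), "amount": 12.5, "country": "FR"},
    {"loaded_at": datetime(2022, 5, 1, 11), "amount": 9.0, "country": "FR"},
]
for alert in check_table(rows, "loaded_at", ["amount", "country"], now=datetime(2022, 5, 2, 11),
                         max_age=timedelta(hours=6), min_completeness=0.9):
    print(alert)
```

Fixed thresholds like these are the simplest possible choice; the value of a dedicated tool lies precisely in learning what "normal" looks like for each table instead of relying on hand-set limits.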

- Apache Kafka, Adopt

Apache Kafka is a data and event streaming platform based on a publish-and-subscribe model. Thousands of organisations use it to exchange data between applications and to build data pipelines for real-time analytics, communication between microservices, and critical applications such as monitoring and fraud detection. Its distributed architecture provides the very high performance, availability and elasticity that such uses require. Created at LinkedIn, Kafka is today a major open source project. Unique on the market for its functionality and the range of possibilities it offers, it is essential for companies that handle large volumes of data. However, it remains a complex tool, and its potential should be evaluated before rolling it out across the organisation.
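The publish-and-subscribe model can be sketched in a few lines: producers append messages to a partitioned, append-only log, and each consumer group tracks its own read offset per partition, so several applications (say, fraud detection and monitoring) can read the same stream independently. The in-memory `Topic` class below is a teaching sketch, not the Kafka client API; choosing the partition from a hash of the key mirrors the idea behind Kafka’s key-based partitioning, although Kafka’s default partitioner uses a different hash function.

```python
import zlib
from collections import defaultdict
from typing import Dict, List, Tuple


class Topic:
    """In-memory stand-in for a partitioned, append-only topic."""

    def __init__(self, partitions: int = 3):
        self.logs: List[List[Tuple[str, str]]] = [[] for _ in range(partitions)]
        # Committed offsets: consumer group -> partition -> next offset to read.
        self.offsets: Dict[str, Dict[int, int]] = defaultdict(lambda: defaultdict(int))

    def publish(self, key: str, value: str) -> int:
        """Append to the partition chosen by the key, so one key keeps its order."""
        partition = zlib.crc32(key.encode("utf-8")) % len(self.logs)
        self.logs[partition].append((key, value))
        return partition

    def poll(self, group: str) -> List[Tuple[str, str]]:
        """Return the messages this group has not read yet and advance its offsets."""
        batch = []
        for partition, log in enumerate(self.logs):
            start = self.offsets[group][partition]
            batch.extend(log[start:])
            self.offsets[group][partition] = len(log)
        return batch


topic = Topic()
topic.publish("card-42", "payment 30 EUR")
topic.publish("card-42", "payment 900 EUR")
topic.publish("card-7", "payment 12 EUR")
print(topic.poll("fraud-detection"))  # all three messages
print(topic.poll("monitoring"))       # the same three: groups are independent
print(topic.poll("fraud-detection"))  # nothing new for this group
```

Messages are never removed when they are read; only the offsets move. That is what allows new consumers to join later and replay the stream.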

- Apache Spark, Adopt

Apache Spark is an open source distributed computing framework that delivers unparalleled performance for processing very large volumes of data. Supported and used by the biggest tech players, Apache Spark offers APIs in Scala, Java, Python and R, as well as SQL. It is indispensable for large-scale data analysis, for processing massive data streams, for machine learning, and for large-scale data operations (aggregation from countless sources, migrations). Apache Spark is also designed to provide elasticity, availability and protection against data loss. However, it requires advanced skills and heavy infrastructure (although the cloud can significantly reduce the cost). While alternatives are better suited to certain use cases, Apache Spark is a fundamental technology for all larger organisations.
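The core pattern behind a distributed engine like Spark (split the data into partitions, aggregate each partition in parallel, then merge the partial results) can be illustrated on a single machine with Python’s standard library. In this conceptual sketch a thread pool stands in for cluster executors, so it shows the structure of the computation rather than delivering real speed-ups. It does not use the Spark API, and `partial_totals` and `total_by_country` are hypothetical names.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from typing import Iterable, List, Tuple

Sale = Tuple[str, float]  # (country, amount)


def partition(data: List[Sale], n: int) -> List[List[Sale]]:
    """Split the dataset into n roughly equal chunks (round-robin)."""
    return [data[i::n] for i in range(n)]


def partial_totals(chunk: Iterable[Sale]) -> Counter:
    """Local aggregation of one partition, done before any data is exchanged."""
    totals = Counter()
    for country, amount in chunk:
        totals[country] += amount
    return totals


def total_by_country(data: List[Sale], workers: int = 4) -> dict:
    """Aggregate every partition in parallel, then merge the partial results."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = pool.map(partial_totals, partition(data, workers))
    result = Counter()
    for p in partials:
        result.update(p)  # Counter.update adds values key by key
    return dict(result)


sales = [("FR", 10), ("BE", 5), ("FR", 7), ("DE", 3), ("BE", 1)]
print(total_by_country(sales))
```

Aggregating locally before merging means only small per-key summaries travel between workers, which is what lets this pattern scale to very large datasets spread over many machines.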

- AutoML, Adopt

AutoML tools aim to automate machine learning so that people without data science skills (analysts or developers, for example) can still exploit its potential. Themselves based on artificial intelligence, they take care of some of the complex and tedious steps of the data science process (data preparation, feature selection, model selection, evaluation of results, etc.). AutoML makes it possible to industrialise the use of machine learning for classic use cases such as classification, regression, forecasting and image recognition. All the major platforms (AWS, Azure, GCP, IBM) have offerings in this field, where many highly innovative specialists such as Dataiku, DataRobot and H2O.ai also excel.
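At its simplest, the model-selection step that AutoML tools automate consists of fitting several candidate models and keeping the one with the lowest error on held-out data. The sketch below does this for two deliberately simple regressors (a constant mean predictor and a least-squares line). Real AutoML tools search far larger spaces of models, features and hyperparameters, and the function names here are hypothetical.

```python
from statistics import mean
from typing import Callable, Dict, List, Tuple

Model = Callable[[float], float]


def fit_mean(xs: List[float], ys: List[float]) -> Model:
    """Baseline: always predict the training mean."""
    m = mean(ys)
    return lambda x: m


def fit_line(xs: List[float], ys: List[float]) -> Model:
    """Ordinary least squares for y = a * x + b."""
    mx, my = mean(xs), mean(ys)
    a = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    b = my - a * mx
    return lambda x: a * x + b


def mse(model: Model, xs: List[float], ys: List[float]) -> float:
    """Mean squared error of a model on a dataset."""
    return mean((model(x) - y) ** 2 for x, y in zip(xs, ys))


def auto_select(xs: List[float], ys: List[float],
                candidates: Dict[str, Callable[[List[float], List[float]], Model]],
                holdout: float = 0.25) -> Tuple[str, float]:
    """Train each candidate on the first part of the data, score it on the rest, keep the best."""
    split = int(len(xs) * (1 - holdout))  # ordered split, suitable for time series
    scores = {}
    for name, fit in candidates.items():
        model = fit(xs[:split], ys[:split])
        scores[name] = mse(model, xs[split:], ys[split:])
    best = min(scores, key=scores.get)
    return best, scores[best]


xs = [float(x) for x in range(12)]
ys = [2 * x + 1 for x in xs]
print(auto_select(xs, ys, {"mean": fit_mean, "line": fit_line}))
```

Scoring on data the model has not seen is the essential design choice: selecting on training error would always favour the most flexible model, whether or not it generalises.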

- AWS QLDB, Trial

AWS QLDB (Quantum Ledger Database) is a cloud-based ledger database that provides a transparent, immutable and cryptographically verifiable log of changes. As with a blockchain, each new entry adds a record to the history that cannot be erased or modified, and whose author is irrefutably identified. The difference is that the ledger relies on a central trusted authority (AWS) rather than on a decentralised mechanism, which can be complex and expensive to implement. For example, QLDB allows the Swedish bank Klarna to keep a complete history of financial transactions, and the British vehicle registration office to keep a list of the owners of each vehicle. These references attest to the robustness of the solution and its value for use cases requiring complete and rigorous traceability.
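The idea of a cryptographically verifiable journal can be illustrated with a hash chain: each entry stores the SHA-256 digest of the previous one, so altering any past record breaks every later link. QLDB’s actual journal and digest mechanism is more elaborate, and in a managed service the author’s identity comes from the platform’s access control rather than from a plain field as here. This `Journal` class is only a sketch of the principle, and its names are hypothetical.

```python
import hashlib
import json
from typing import List

GENESIS = "0" * 64  # placeholder "previous hash" for the very first entry


class Journal:
    """Append-only log in which every entry is chained to its predecessor."""

    def __init__(self):
        self.entries: List[dict] = []

    @staticmethod
    def _digest(author: str, data: dict, prev_hash: str) -> str:
        # sort_keys makes the serialisation, and therefore the hash, deterministic.
        payload = json.dumps({"author": author, "data": data, "prev": prev_hash}, sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def append(self, author: str, data: dict) -> str:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        entry_hash = self._digest(author, data, prev_hash)
        self.entries.append({"author": author, "data": data, "prev": prev_hash, "hash": entry_hash})
        return entry_hash

    def verify(self) -> bool:
        """Recompute every hash from the start; any edit to a past entry is detected."""
        prev_hash = GENESIS
        for entry in self.entries:
            if entry["prev"] != prev_hash:
                return False
            if self._digest(entry["author"], entry["data"], prev_hash) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


journal = Journal()
journal.append("registry-office", {"vehicle": "AB12 CDE", "owner": "Alice"})
journal.append("registry-office", {"vehicle": "AB12 CDE", "owner": "Bob"})
print(journal.verify())                        # True
journal.entries[0]["data"]["owner"] = "Mallory"
print(journal.verify())                        # False: the tampering is detected
```

Publishing only the latest hash is enough for a third party to check later that the whole history is intact, which is what makes such a log verifiable without a blockchain.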

- Azure Synapse Analytics, Adopt

Azure Synapse Analytics is an integrated PaaS analytics solution for Big Data. Behind a unified interface (Synapse Studio), Azure Synapse Analytics combines a data warehouse, a distributed query engine (Synapse SQL), a large-scale processing engine (Apache Spark) and a data loading tool (Synapse Pipelines), all with the convenience, elasticity and performance of the cloud. Naturally suited to working with other Microsoft solutions (Power BI, for example), Azure Synapse Analytics is aimed particularly at medium to large companies with advanced business intelligence needs (reporting, data discovery, predictive analysis, etc.) and is optimised for data volumes in the range of several terabytes.

- Collibra, Assess

Too often, data governance runs up against day-to-day priorities. If it is not to remain a theoretical exercise, it needs tooling that makes it easier to implement and makes its benefits obvious: unified, clearly referenced, reliable, secure and compliant data that is easy to access. This is the purpose of Collibra’s Data Intelligence Cloud platform. It centralises all information relating to data and its governance (catalogue, rules, lineage, quality, etc.), simplifies the tasks of the various players (data owners, data stewards, data scientists, business users, etc.) and encourages them to collaborate. A partner of Devoteam, which has around 20 Collibra-certified employees, the Belgian-based publisher has established itself as a leader in this key area, which is at the heart of companies’ repositioning around data, so much so that companies like Google and Snowflake are now among its investors.
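Lineage, one of the pieces of information such a platform centralises, is essentially a graph recording which dataset is derived from which. A short sketch shows why it matters: walking the graph upstream from a report lists every source it depends on, and the reverse question (impact analysis) lists every dataset affected when a source changes or fails a quality check. The dataset names and the `upstream` and `impacted_by` functions are hypothetical and unrelated to Collibra’s own API.

```python
from collections import deque
from typing import Dict, List, Set

# Hypothetical lineage: each dataset maps to the datasets it is built from.
LINEAGE: Dict[str, List[str]] = {
    "sales_dashboard": ["sales_by_region"],
    "sales_by_region": ["orders_clean", "regions"],
    "orders_clean": ["crm.orders"],
    "regions": ["erp.countries"],
}


def upstream(dataset: str, lineage: Dict[str, List[str]]) -> Set[str]:
    """Breadth-first walk returning every direct or indirect source of a dataset."""
    seen: Set[str] = set()
    queue = deque(lineage.get(dataset, []))
    while queue:
        current = queue.popleft()
        if current in seen:
            continue  # also protects against accidental cycles
        seen.add(current)
        queue.extend(lineage.get(current, []))
    return seen


def impacted_by(source: str, lineage: Dict[str, List[str]]) -> Set[str]:
    """Impact analysis: every dataset that directly or indirectly depends on `source`."""
    return {d for d in lineage if source in upstream(d, lineage)}


print(sorted(upstream("sales_dashboard", LINEAGE)))
print(sorted(impacted_by("crm.orders", LINEAGE)))
```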

- Databricks, Adopt

In 2013, the creators of the high-performance analytics engine Spark founded Databricks to help enterprises harness its full power. Cloud-based and tightly integrated with AWS, Azure and GCP, the Databricks data platform offers a high-performance, flexible and scalable solution for big data, advanced analytics, artificial intelligence and streaming. The vendor champions the “lakehouse” approach, which combines the robustness of a data warehouse with the flexibility of a data lake. The platform is tailored to specialised users (data engineers, data scientists, etc.) and to organisations facing complexity and/or large volumes. Currently valued at $38 billion, Databricks continues to innovate rapidly to expand its offering and consolidate its position as a major player in data.

- dbt, Trial

An acronym for “Data Build Tool”, dbt focuses on the T of ETL/ELT processes, i.e. the transformation phase in which data is shaped for use. Integrating with most data warehouses on the market through standard connectors, dbt enables data analysts and data engineers to build and maintain data pipelines easily and flexibly despite the growing complexity of the underlying business logic. To achieve this, dbt relies on SQL and borrows practices from software development (CI/CD, version control, testing, code reuse, etc.). Much more flexible and easier to use than traditional ETL tools, dbt enables small data teams to keep up with the explosion of requests to create or modify data flows.
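The way dbt treats a transformation as a SQL `SELECT` that is materialised and then tested can be mimicked with Python’s built-in `sqlite3` module. The sketch below materialises a hypothetical `stg_customers` model, then runs two checks similar in spirit to dbt’s built-in `unique` and `not_null` tests, where a test is a query returning offending rows and passes when it returns none. In dbt itself, the model would live in a `.sql` file and the tests would be declared in YAML, so this illustrates the workflow, not dbt’s API.

```python
import sqlite3

# Hypothetical "model": a SELECT that cleans raw data, as a dbt model file would.
STG_CUSTOMERS = """
SELECT id AS customer_id,
       LOWER(TRIM(email)) AS email,
       UPPER(country) AS country
FROM raw_customers
WHERE id IS NOT NULL
"""


def materialise(conn: sqlite3.Connection, name: str, select_sql: str) -> None:
    """Rebuild the model as a table (names are trusted here: sketch only)."""
    conn.execute(f"DROP TABLE IF EXISTS {name}")
    conn.execute(f"CREATE TABLE {name} AS {select_sql}")


def failing_rows(conn: sqlite3.Connection, sql: str) -> int:
    """A check is a query returning offending rows; zero rows means it passes."""
    return len(conn.execute(sql).fetchall())


def check_unique(conn: sqlite3.Connection, table: str, column: str) -> int:
    return failing_rows(conn, f"SELECT {column} FROM {table} GROUP BY {column} HAVING COUNT(*) > 1")


def check_not_null(conn: sqlite3.Connection, table: str, column: str) -> int:
    return failing_rows(conn, f"SELECT {column} FROM {table} WHERE {column} IS NULL")


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE raw_customers (id INTEGER, email TEXT, country TEXT)")
    conn.executemany("INSERT INTO raw_customers VALUES (?, ?, ?)", [
        (1, " Ada@Example.com ", "fr"),
        (2, "grace@example.com", "be"),
        (None, "ghost@example.com", "de"),  # filtered out by the model
    ])
    materialise(conn, "stg_customers", STG_CUSTOMERS)
    print("unique customer_id failures:", check_unique(conn, "stg_customers", "customer_id"))
    print("not_null email failures:", check_not_null(conn, "stg_customers", "email"))
```

Because models and checks are plain text, they can be versioned, reviewed and run in CI like any other code, which is the practice the paragraph above describes.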

- Dataiku, Assess

Dataiku is a French company that offers an end-to-end solution for data projects, particularly artificial intelligence initiatives. On a centralised platform, data analysts, data scientists and data engineers can collaborate to govern, connect and prepare data, and to build analyses, visualisations and machine learning models. Dataiku’s lightweight, user-friendly solution is well suited to mid-sized companies that want to accelerate their use of data, foster collaboration between the teams involved, and take advantage of artificial intelligence without having to migrate their data infrastructure to the cloud. Gartner positions Dataiku as a leader in data science and machine learning platforms, but it faces strong competition.

- Data mesh, Adopt

By removing the capacity limits of data warehouses, the cloud has also exacerbated the rigidities inherent in traditional, monolithic, centralised architectures. To bring more agility to data platforms, the data mesh proposes a distributed approach (comparable to microservices in application development). Data is organised by domain (business, technical, geographical, etc.), and each domain is managed by an independent, autonomous team. Each domain becomes a product (or hosts several products) that its managers strive to make attractive, useful and convenient for its users. This ultimately favours the democratisation of data. However, shared governance and common tools are essential to the success of this innovative approach, which could revolutionise the culture and use of data within organisations.
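One way to picture the balance between domain autonomy and shared governance is a registry in which each domain team publishes its own data products, while a small set of global rules is enforced centrally for all of them. In this sketch the rules are a named owner, a published schema and a freshness commitment; the `DataProduct` fields, the rules and the `Registry` class are hypothetical examples, not a standard.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class DataProduct:
    """A dataset exposed by a domain team as a product for other teams."""
    domain: str
    name: str
    owner: str                  # accountable team or person
    schema: Dict[str, str]      # column name -> type: the product's contract
    freshness_hours: int        # how stale consumers may expect the data to be
    tags: List[str] = field(default_factory=list)


def governance_issues(product: DataProduct) -> List[str]:
    """Global rules that every domain must follow, whatever its internal choices."""
    issues = []
    if not product.owner:
        issues.append("missing owner")
    if not product.schema:
        issues.append("no published schema")
    if product.freshness_hours <= 0:
        issues.append("freshness commitment must be positive")
    return issues


class Registry:
    """Shared catalogue: domains publish autonomously, governance applies uniformly."""

    def __init__(self):
        self.products: Dict[str, DataProduct] = {}

    def publish(self, product: DataProduct) -> None:
        issues = governance_issues(product)
        if issues:
            raise ValueError(f"{product.domain}.{product.name}: {', '.join(issues)}")
        self.products[f"{product.domain}.{product.name}"] = product

    def by_domain(self, domain: str) -> List[str]:
        return sorted(key for key, p in self.products.items() if p.domain == domain)


registry = Registry()
registry.publish(DataProduct("sales", "orders", "sales-data-team",
                             {"order_id": "int", "amount": "decimal"}, freshness_hours=24))
print(registry.by_domain("sales"))
```

The registry decides nothing about how a domain builds its products; it only refuses products that break the common rules, which is the division of responsibilities the data mesh relies on.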

- Delta Lake, Adopt

Created by Databricks and now open source under the Linux Foundation, Delta Lake is a storage layer that sits on top of the data lake to give the data the reliability needed for analytics and artificial intelligence. To achieve this, Delta Lake adds the ACID (atomicity, consistency, isolation, durability) transactions found in data warehouses, large-scale metadata management, and data versioning. Compatible with the Apache Spark engine, Delta Lake can be deployed on-premises or in the cloud on most platforms. Already used by thousands of companies, Delta Lake is establishing itself as the new standard for Big Data storage.
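Atomic commits and versioning of this kind rest on a transaction log: each commit is a numbered set of actions (data files added or removed), and the table at any version is obtained by replaying the log up to that point. The sketch below reproduces that principle in memory, together with a simplified version of the optimistic concurrency check that rejects a commit based on a stale version. It is loosely modelled on the `add`/`remove` actions of Delta’s log but uses none of Delta Lake’s code or file formats, and the `DeltaLog` class is hypothetical.

```python
from typing import Dict, List, Set


class DeltaLog:
    """In-memory transaction log: version n is the state after commits 0..n."""

    def __init__(self):
        self.commits: List[List[Dict[str, str]]] = []

    def commit(self, actions: List[Dict[str, str]], expected_version: int) -> int:
        """Append all actions as one atomic commit and return the new version.

        Simplified optimistic concurrency: the writer states the version it read
        (-1 for an empty table); if another writer committed in the meantime,
        the commit is rejected and nothing is written.
        """
        current = len(self.commits) - 1
        if expected_version != current:
            raise RuntimeError(f"conflict: table is at version {current}, not {expected_version}")
        self.commits.append(list(actions))
        return len(self.commits) - 1

    def files_at(self, version: int) -> Set[str]:
        """'Time travel': replay the log to rebuild the set of live data files."""
        live: Set[str] = set()
        for actions in self.commits[: version + 1]:
            for action in actions:
                if "add" in action:
                    live.add(action["add"])
                elif "remove" in action:
                    live.discard(action["remove"])
        return live


log = DeltaLog()
log.commit([{"add": "part-000.parquet"}, {"add": "part-001.parquet"}], expected_version=-1)
# An UPDATE rewrites one file: the old file is removed and the new one added in the same commit.
log.commit([{"remove": "part-000.parquet"}, {"add": "part-002.parquet"}], expected_version=0)
print(sorted(log.files_at(0)))  # the table as it was before the update
print(sorted(log.files_at(1)))  # the current table
```

Since readers only ever see complete commits, a query never observes a half-applied update, and old versions remain readable as long as their files are kept.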

- Fivetran, Assess

In less than ten years of existence, Fivetran has established itself through innovation in a data integration market largely dominated by long-standing players. Fivetran is a cloud-based ELT (extract, load, transform) platform for creating, automating and securing data pipelines from any source to cloud data warehouses (BigQuery, Redshift, Synapse, Snowflake, etc.). Fivetran’s strength is its no-code approach, coupled with a very large number of standard connectors, which makes it possible to set up reliable data flows very quickly. This efficiency makes it an ideal solution for very large organisations, or for smaller ones that want to take advantage of rapidly growing data volumes without delay.

- Google Data Cloud, Adopt

Google Cloud brings together the services and tools needed to build an end-to-end data platform on GCP. These include the BigQuery data warehouse, the Looker data visualisation tool, the Vertex AI platform, and a wide range of pre-trained machine learning models. Forming a coherent ecosystem, these solutions integrate seamlessly with each other while respecting open source standards, so they can easily be combined with, or swapped for, third-party tools. On the back end, GCP guarantees a very high level of performance at all scales thanks to its considerable storage and computing capacity. Google Cloud makes it easy and cost-effective to get started with data and provides the same tools used by leading data-driven companies such as Netflix, Spotify and Twitter.

- Informatica, Adopt

Founded in 1993 at the dawn of data warehousing and the historical leader in data integration, Informatica has reinvented itself in recent years as a specialist in cloud data management. Today, the vendor offers a SaaS platform built around an artificial intelligence engine that covers all data-related issues: integration, quality, master data management (MDM), governance, anonymisation, privacy, etc. In a crowded data ecosystem, the functional coverage and maturity of Informatica’s Intelligent Data Management solution are unparalleled. Although relatively expensive, Informatica’s tools are a safe choice for enterprises that need to leverage significant volumes of data spread across numerous, heterogeneous and siloed sources.

- Kubeflow, Trial

From data collection to model development, and from training to deployment and maintenance, machine learning (ML) projects proceed through iterations involving data scientists, data engineers, ops engineers and more. To deliver more reliable models faster, it is essential to industrialise this workflow and facilitate collaboration between these players. The idea behind the Kubeflow open source project is to leverage the Kubernetes ecosystem, with its well-known, proven tools and execution environment. Handover from one stage to the next becomes easier, and the team can readily develop, test, validate and deploy new versions of a model. Because the models and all their components run in containers on Kubernetes, they are highly portable and scalable. Although still new, Kubeflow is quickly becoming a promising option in the emerging MLOps market.

- Looker, Adopt

Looker is a BI and data analysis tool for data modelling, transformation and visualisation. It is built on its own language, LookML, in which data models are defined once and from which Looker generates SQL queries, so business users can explore data without writing SQL. Centralised on the platform, these models can then be shared, which helps harmonise data usage and governance within the organisation. Finally, Looker does not store data permanently: it queries the underlying databases directly and relies on their power for its performance. These particularities distinguish it from its many competitors and make it a very rich tool. Looker is suitable for all organisations, but it nevertheless requires a degree of maturity. Acquired by Google in 2019, it has become GCP’s primary reporting tool and is now integrated into the platform.
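The principle behind a modelling layer like LookML (define dimensions and measures once, then generate the SQL for each question a user asks) can be shown with a tiny semantic layer in Python. This is neither LookML syntax nor Looker’s query generator; the `orders` model, its fields and the `build_query` function are hypothetical, and the date expression uses the SQLite dialect.

```python
from typing import Dict, List

# Hypothetical model: business-friendly names mapped to SQL expressions, defined once.
MODEL = {
    "table": "orders",
    "dimensions": {
        "country": "orders.country",
        "order_month": "strftime('%Y-%m', orders.created_at)",  # SQLite dialect
    },
    "measures": {
        "order_count": "COUNT(*)",
        "total_revenue": "SUM(orders.amount)",
    },
}


def build_query(model: Dict, dimensions: List[str], measures: List[str]) -> str:
    """Turn the fields a user picks into SQL, reusing the shared definitions."""
    select = [f"{model['dimensions'][d]} AS {d}" for d in dimensions]
    select += [f"{model['measures'][m]} AS {m}" for m in measures]
    sql = f"SELECT {', '.join(select)} FROM {model['table']}"
    if dimensions:
        # Group by the position of each dimension column in the SELECT list.
        sql += " GROUP BY " + ", ".join(str(i + 1) for i in range(len(dimensions)))
    return sql


print(build_query(MODEL, ["country"], ["total_revenue"]))
print(build_query(MODEL, ["order_month", "country"], ["order_count", "total_revenue"]))
```

Because "total_revenue" is defined in one place, every report that uses it computes revenue the same way, which is the governance benefit of centralised models.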

- Microsoft Power BI, Adopt

Power BI, Microsoft’s business intelligence and data visualisation solution, lets users create dynamic, interactive reports and dashboards from the many data sources for which it offers standard connectors. Inexpensive, since its basic functionality is included in Microsoft 365, Power BI is also very easy to learn because it follows the familiar principles of Microsoft tools. For these two reasons, it promotes the democratisation of data use, particularly in SMEs, while offering advanced governance and security features to control usage. For several years, Gartner has positioned Power BI as the clear leader in data visualisation, with Tableau appearing to be its only real competitor.

- Qlik, Adopt

Founded in 1993 and positioned by Gartner as a leader in the sector for 11 years, Qlik is one of the pioneers of in-memory business intelligence. The vendor, which has developed its offering technically and functionally over the years, now provides a complete SaaS data platform covering everything from data integration and extraction to consumption in the form of alerts, charts and reports. Qlik stands out in particular for its in-memory operation, which increases performance, and for very user-friendly tools that promote the democratisation of data and collaboration around data throughout the organisation, regardless of its size or sector of activity. Its solutions are also inexpensive, making them easy and safe to try.

- Snowflake, Adopt

By combining data warehouse, data lake, data engineering, data science and data sharing services, Snowflake spans the entire space between data sources and end users. Natively multi-cloud and multi-region, and disarmingly easy to use, the Snowflake platform breaks down both the organisational and technical barriers of traditional data infrastructures, creating a one-stop shop for data and democratising its use in the enterprise. Snowflake particularly helps organisations with an international, complex data environment, or conversely those lacking IT and DBA resources, to exploit their data easily and quickly. In all cases, the solution is very easy to test in order to assess its relevance and potential. More than 5,000 companies have already been convinced, and Snowflake is growing by more than 100%.

- Tableau, Adopt

Tableau is a self-service data visualisation tool that allows anyone to create and share interactive dashboards without code or laborious operations. Tableau promotes the spread of data within organisations, making them more transparent and data-driven. Competing with Microsoft’s Power BI, Tableau stands out for its ease of use, ease of integration and flexibility, all of which put it within reach of every company, regardless of size and activity. Tableau is now expanding its functionality into data preparation (Prep Builder) and analytics (Ask Data, Explain Data). In 2019, Tableau was acquired by Salesforce, one of Devoteam’s strategic partners.

- Talend, Adopt

Talend, a long-time leader in data integration, has reinvented itself in the cloud era by making data quality its spearhead. To ensure that users have healthy, compliant and usable data, the firm offers Talend Data Fabric, a platform that combines integration, governance and data quality. The cornerstone of this approach, the Trust Score, is a synthetic indicator of data reliability that Talend’s tools can calculate and, above all, help improve. To consolidate its position at the heart of the enterprise data ecosystem, Talend can also rely on technology partnerships with the major cloud providers (AWS, Azure, GCP) and with leading analytics players (Snowflake, Databricks, Cloudera).